Building Machine Learning Pipelines - Introduction - Part 1

What needs to happen to move your machine learning model from an experiment to a robust production system?

The key benefit of machine learning pipelines lies in automating the model life cycle steps. When new training data becomes available, a workflow that includes data validation, preprocessing, model training, analysis, and deployment should be triggered.

Many data science teams still manually handle these steps, which is both costly and prone to errors. Implementing machine learning pipelines can streamline workflows, improve efficiency, and reduce mistakes.

Let’s explore some key benefits of using ML pipelines:

Focus on Innovation – Data scientists can concentrate on developing new models instead of maintaining existing ones, leading to higher job satisfaction and retention.

Bug Prevention – Automated workflows ensure consistency between preprocessing and training, avoiding errors caused by manual updates.

Paper Trail – Experiment tracking and model release management document changes, aiding reproducibility, debugging, and compliance.

Standardization – Consistent setups streamline onboarding, enhance collaboration, and improve efficiency.

Business Impact – Automation reduces costs, simplifies updates, and enables faster model reproduction. Additional benefits include:

Bias detection in data and models.

Compliance support (e.g., GDPR).

More development time freed up for data scientists.

When to Think About Machine Learning Pipelines

Machine learning pipelines offer a lot of benefits, but they’re not always necessary. If you're just experimenting with a new model, testing a new architecture, or trying to reproduce a recent study, you probably don’t need a full pipeline.

However, once a model has real users—like in an app—it needs regular updates and fine-tuning. This is where pipelines become valuable, helping data scientists keep models up to date without the hassle of manual work.

Pipelines also become more important as projects grow. If you're working with large datasets or need more computing power, they make scaling up easier. Plus, if repeatability matters, automation ensures consistency and keeps a clear record of changes, making the whole process smoother and more reliable.

Steps in a Machine Learning Pipeline

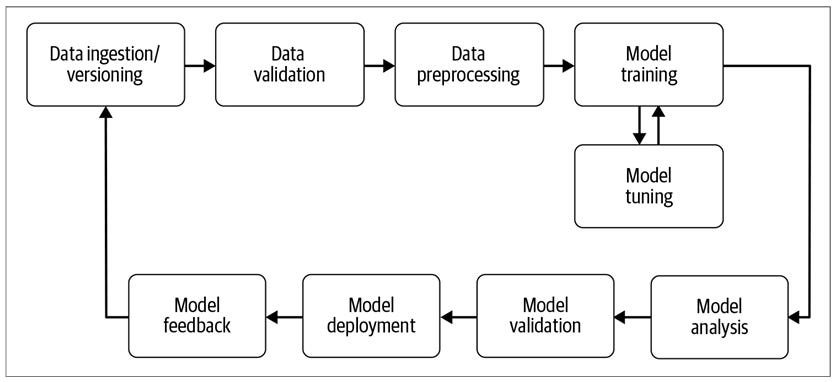

A machine learning pipeline starts by bringing in new training data and ends with feedback on how the model is performing—whether through performance metrics or user input. Along the way, it includes steps like data preprocessing, model training, analysis, and deployment. Doing all of this manually can be tedious and prone to errors.

In this article series, we’ll explore tools and solutions that help automate these steps, making machine learning pipelines more efficient and reliable.

A machine learning pipeline typically consists of several key steps. We will cover each step in a separate article.

Data Ingestion and Versioning: This is the first step where raw data is collected and formatted for further processing. Data versioning helps track snapshots of data linked to trained models.

Data Validation: Before training, the new data is checked for anomalies to confirm that its statistics match expectations. This step can flag issues such as class imbalance and can halt the pipeline if significant shifts are detected.

Data Preprocessing: Raw data is transformed into a usable format, including label encoding and feature extraction. Changes in preprocessing must be tracked to maintain pipeline integrity.

Model Training and Tuning: The model is trained on the preprocessed data to minimize errors. Hyperparameter tuning is crucial for improving performance, often requiring scalable infrastructure.

Model Analysis: Beyond accuracy, metrics like precision, recall, and AUC are evaluated. Fairness checks and feature dependence analysis help ensure reliable predictions.

Model Versioning: This step tracks changes in model architecture, hyperparameters, and datasets to document improvements. Versioning decisions depend on the impact of data and parameter modifications.

Model Deployment: Modern model servers deploy trained models with minimal manual intervention. APIs enable A/B testing and seamless model updates without affecting applications.

Feedback Loops: Post-deployment, model performance is monitored. New data can be collected to improve future models, either manually or automatically.

Data Privacy: While not traditionally part of ML pipelines, privacy concerns are becoming important. Future pipelines may integrate privacy-preserving techniques to comply with regulations.
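Three of the steps above (data validation, model analysis, and model versioning) are easy to illustrate in a few lines. The following is a minimal sketch using only the Python standard library, not the tooling this series will cover. The function names, the 20% drift threshold, and the choice of the mean as the statistic to compare are all assumptions made for illustration.

```python
import hashlib
import json
from statistics import mean


class DataValidationError(Exception):
    """Raised to halt the pipeline when new data drifts too far."""


def validate_mean(reference, new, max_relative_shift=0.2):
    """Data validation: compare a feature's mean in the new data against
    a reference batch, and halt if it shifted by more than the threshold."""
    ref_mean, new_mean = mean(reference), mean(new)
    denominator = abs(ref_mean) or 1.0  # avoid dividing by zero
    shift = abs(new_mean - ref_mean) / denominator
    if shift > max_relative_shift:
        raise DataValidationError(f"mean shifted by {shift:.0%}")
    return shift


def precision_recall(y_true, y_pred):
    """Model analysis: precision and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def version_id(hyperparameters, data_bytes):
    """Model versioning: derive a short identifier from the hyperparameters
    and the raw bytes of the data snapshot used for training."""
    digest = hashlib.sha256()
    digest.update(json.dumps(hyperparameters, sort_keys=True).encode())
    digest.update(data_bytes)
    return digest.hexdigest()[:12]
```

Because the identifier depends on both the hyperparameters and the data, a change to either produces a new version, which is one simple way to link a trained model to the data snapshot behind it.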

Pipeline Orchestration

All the components of a machine learning pipeline described in the previous section need to be executed or, as we say, orchestrated in the correct order: the inputs to a component must be computed before that component runs.
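The ordering requirement amounts to a topological sort of the step dependencies. The sketch below shows the idea with Python's standard-library `graphlib` module (Python 3.9+). It is not how any particular orchestrator is implemented, and the step names and the `run_step` callback are hypothetical placeholders.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps whose outputs it needs as inputs.
dependencies = {
    "data_validation": {"data_ingestion"},
    "data_preprocessing": {"data_validation"},
    "model_training": {"data_preprocessing"},
    "model_analysis": {"model_training"},
    "model_deployment": {"model_analysis"},
}


def run_pipeline(dependencies, run_step):
    """Run every step exactly once, after all of its inputs were produced."""
    executed = []
    for step in TopologicalSorter(dependencies).static_order():
        run_step(step)  # hypothetical callback that does the actual work
        executed.append(step)
    return executed


# A no-op callback is enough to inspect the execution order.
order = run_pipeline(dependencies, run_step=lambda step: None)
```

A real orchestrator adds scheduling, retries, and distributed execution on top of this ordering, but the dependency graph is the core idea.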

The orchestration of these steps is performed by tools such as Apache Beam, Apache Airflow, or Kubeflow Pipelines for Kubernetes infrastructure. We will discuss these (along with code) in detail in the coming articles.

While data pipeline tools coordinate the machine learning pipeline steps, pipeline artifact stores such as the MetadataStore from TensorFlow's ML Metadata library capture the outputs of the individual processes. We will provide an overview of TFX’s MetadataStore and look behind the scenes of TFX and its pipeline components.
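To make the role of an artifact store concrete, here is a toy version built on an in-memory SQLite database. It is only a sketch of the concept and is not the ML Metadata API. The table layout, function names, and example URIs are assumptions made for illustration.

```python
import sqlite3


def open_store():
    """Create an in-memory store with one row per recorded artifact."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE artifacts (step TEXT, name TEXT, uri TEXT)")
    return conn


def record_artifact(conn, step, name, uri):
    """Record where a pipeline step wrote one of its outputs."""
    conn.execute("INSERT INTO artifacts VALUES (?, ?, ?)", (step, name, uri))


def artifacts_for(conn, step):
    """Return the (name, uri) pairs that a given step produced."""
    rows = conn.execute(
        "SELECT name, uri FROM artifacts WHERE step = ? ORDER BY name",
        (step,),
    )
    return rows.fetchall()


store = open_store()
# Hypothetical output locations for a single pipeline run.
record_artifact(store, "data_preprocessing", "features", "artifacts/run-1/features")
record_artifact(store, "model_training", "model", "artifacts/run-1/model")
```

Downstream steps can then look up their inputs by querying the store instead of relying on hard-coded paths.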

Why Pipeline Orchestration?

In 2015, a team of machine learning engineers at Google identified a key reason why many ML projects fail: most rely on custom code to connect the different pipeline steps. This custom code is often project-specific and difficult to reuse.

Their findings were published in the paper “Hidden Technical Debt in Machine Learning Systems,” which highlighted the fragility of such glue code and its lack of scalability. Workflow tools like Apache Beam, Apache Airflow, and Kubeflow Pipelines help address this issue. They enable standardized orchestration, reducing reliance on brittle scripts and making ML workflows more scalable and efficient.

Learning a new tool like Beam or Airflow, or using a framework like Kubeflow, might feel like extra work at first. But once you get the hang of it, the time and effort will be worth it. Setting up machine learning infrastructure, like Kubernetes, may take some time, but it makes things run smoother, faster, and with fewer errors in the long run.

Final Thoughts

In this article, we introduced the concept of machine learning pipelines and broke down their key steps. We also highlighted the benefits of automating these processes to save time and reduce errors. In the next article, we’ll dive into building our pipeline!